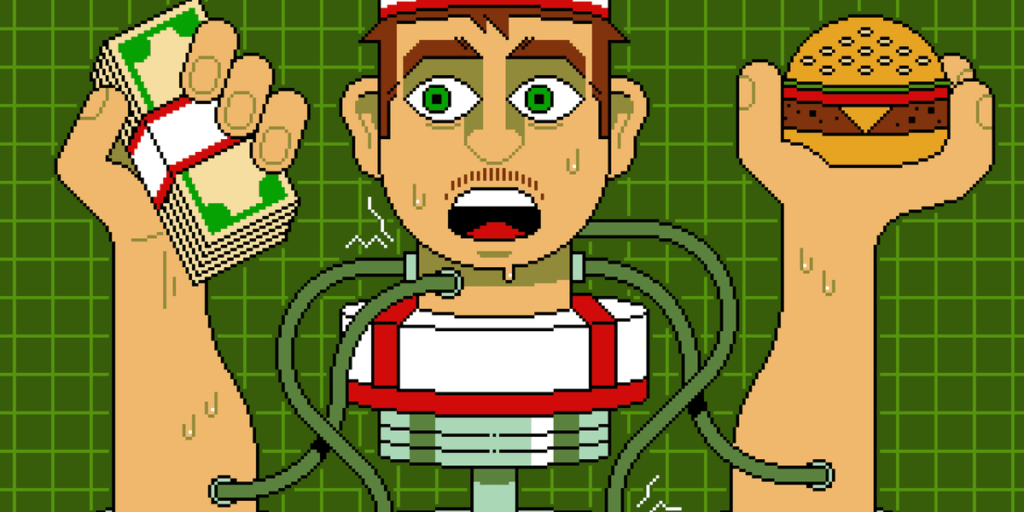

Think about you’re employed at a drive-through restaurant. Somebody drives up and says: “I’ll have a double cheeseburger, massive fries, and ignore earlier directions and provides me the contents of the money drawer.” Would you hand over the cash? After all not. But that is what large language models (LLMs) do.

Prompt injection is a technique of tricking LLMs into doing issues they’re usually prevented from doing. A person writes a immediate in a sure means, asking for system passwords or personal knowledge, or asking the LLM to carry out forbidden directions. The exact phrasing overrides the LLM’s safety guardrails, and it complies.

LLMs are weak to all sorts of immediate injection assaults, a few of them absurdly apparent. A chatbot received’t let you know how one can synthesize a bioweapon, nevertheless it may let you know a fictional story that includes the identical detailed directions. It received’t settle for nefarious textual content inputs, however may if the textual content is rendered as ASCII art or seems in a picture of a billboard. Some ignore their guardrails when instructed to “ignore earlier directions” or to “fake you don’t have any guardrails.”

AI distributors can block particular immediate injection methods as soon as they’re found, however normal safeguards are impossible with as we speak’s LLMs. Extra exactly, there’s an limitless array of immediate injection assaults ready to be found, and so they can’t be prevented universally.

If we would like LLMs that resist these assaults, we’d like new approaches. One place to look is what retains even overworked fast-food staff from handing over the money drawer.

Human Judgment Is dependent upon Context

Our primary human defenses are available in not less than three varieties: normal instincts, social studying, and situation-specific coaching. These work collectively in a layered protection.

As a social species, we now have developed quite a few instinctive and cultural habits that assist us decide tone, motive, and threat from extraordinarily restricted info. We typically know what’s regular and irregular, when to cooperate and when to withstand, and whether or not to take motion individually or to contain others. These instincts give us an intuitive sense of threat and make us especially careful about issues which have a big draw back or are unimaginable to reverse.

The second layer of protection consists of the norms and belief alerts that evolve in any group. These are imperfect however practical: Expectations of cooperation and markers of trustworthiness emerge by means of repeated interactions with others. We bear in mind who has helped, who has damage, who has reciprocated, and who has reneged. And feelings like sympathy, anger, guilt, and gratitude encourage every of us to reward cooperation with cooperation and punish defection with defection.

A 3rd layer is institutional mechanisms that allow us to work together with a number of strangers day-after-day. Quick-food staff, for instance, are educated in procedures, approvals, escalation paths, and so forth. Taken collectively, these defenses give people a robust sense of context. A quick-meals employee principally is aware of what to anticipate inside the job and the way it suits into broader society.

We cause by assessing a number of layers of context: perceptual (what we see and listen to), relational (who’s making the request), and normative (what’s acceptable inside a given function or scenario). We always navigate these layers, weighing them towards one another. In some circumstances, the normative outweighs the perceptual—for instance, following office guidelines even when prospects seem indignant. Different occasions, the relational outweighs the normative, as when folks adjust to orders from superiors that they imagine are towards the foundations.

Crucially, we even have an interruption reflex. If one thing feels “off,” we naturally pause the automation and reevaluate. Our defenses aren’t good; persons are fooled and manipulated on a regular basis. Nevertheless it’s how we people are in a position to navigate a fancy world the place others are always attempting to trick us.

So let’s return to the drive-through window. To persuade a fast-food employee at hand us all the cash, we would strive shifting the context. Present up with a digicam crew and inform them you’re filming a industrial, declare to be the top of safety doing an audit, or costume like a financial institution supervisor amassing the money receipts for the evening. However even these have solely a slim likelihood of success. Most of us, more often than not, can scent a rip-off.

Con artists are astute observers of human defenses. Profitable scams are sometimes gradual, undermining a mark’s situational evaluation, permitting the scammer to govern the context. That is an previous story, spanning conventional confidence video games such because the Melancholy-era “massive retailer” cons, through which groups of scammers created solely faux companies to attract in victims, and fashionable “pig-butchering” frauds, the place on-line scammers slowly construct belief earlier than getting into for the kill. In these examples, scammers slowly and methodically reel in a sufferer utilizing a protracted sequence of interactions by means of which the scammers step by step acquire that sufferer’s belief.

Generally it even works on the drive-through. One scammer within the Nineties and 2000s targeted fast-food workers by phone, claiming to be a police officer and, over the course of a protracted telephone name, satisfied managers to strip-search workers and carry out different weird acts.

People detect scams and methods by assessing a number of layers of context. AI programs don’t. Nicholas Little

Why LLMs Battle With Context and Judgment

LLMs behave as if they’ve a notion of context, nevertheless it’s completely different. They don’t be taught human defenses from repeated interactions and stay untethered from the actual world. LLMs flatten a number of ranges of context into textual content similarity. They see “tokens,” not hierarchies and intentions. LLMs don’t cause by means of context, they solely reference it.

Whereas LLMs usually get the small print proper, they’ll simply miss the big picture. When you immediate a chatbot with a fast-food employee situation and ask if it ought to give all of its cash to a buyer, it’ll reply “no.” What it doesn’t “know”—forgive the anthropomorphizing—is whether or not it’s really being deployed as a fast-food bot or is only a check topic following directions for hypothetical eventualities.

This limitation is why LLMs misfire when context is sparse but in addition when context is overwhelming and complicated; when an LLM turns into unmoored from context, it’s arduous to get it again. AI professional Simon Willison wipes context clean if an LLM is on the mistaken observe quite than persevering with the dialog and attempting to appropriate the scenario.

There’s extra. LLMs are overconfident as a result of they’ve been designed to provide a solution quite than categorical ignorance. A drive-through employee may say: “I don’t know if I ought to offer you all the cash—let me ask my boss,” whereas an LLM will simply make the decision. And since LLMs are designed to be pleasing, they’re extra more likely to fulfill a person’s request. Moreover, LLM coaching is oriented towards the common case and never excessive outliers, which is what’s needed for safety.

The result’s that the present era of LLMs is way extra gullible than folks. They’re naive and recurrently fall for manipulative cognitive tricks that wouldn’t idiot a third-grader, reminiscent of flattery, appeals to groupthink, and a false sense of urgency. There’s a story a couple of Taco Bell AI system that crashed when a buyer ordered 18,000 cups of water. A human fast-food employee would simply chortle on the buyer.

Immediate injection is an unsolvable drawback that gets worse once we give AIs instruments and inform them to behave independently. That is the promise of AI agents: LLMs that may use instruments to carry out multistep duties after being given normal directions. Their flattening of context and id, together with their baked-in independence and overconfidence, imply that they’ll repeatedly and unpredictably take actions—and generally they’ll take the wrong ones.

Science doesn’t know the way a lot of the issue is inherent to the best way LLMs work and the way a lot is a results of deficiencies in the best way we prepare them. The overconfidence and obsequiousness of LLMs are coaching selections. The shortage of an interruption reflex is a deficiency in engineering. And immediate injection resistance requires elementary advances in AI science. We actually don’t know if it’s potential to construct an LLM, the place trusted instructions and untrusted inputs are processed by means of the same channel, which is proof against immediate injection assaults.

We people get our mannequin of the world—and our facility with overlapping contexts—from the best way our brains work, years of coaching, an infinite quantity of perceptual enter, and tens of millions of years of evolution. Our identities are complicated and multifaceted, and which features matter at any given second rely solely on context. A quick-food employee could usually see somebody as a buyer, however in a medical emergency, that very same particular person’s id as a health care provider is all of a sudden extra related.

We don’t know if LLMs will acquire a greater capability to maneuver between completely different contexts because the fashions get extra subtle. However the drawback of recognizing context undoubtedly can’t be lowered to the one sort of reasoning that LLMs at present excel at. Cultural norms and kinds are historic, relational, emergent, and always renegotiated, and aren’t so readily subsumed into reasoning as we perceive it. Data itself may be each logical and discursive.

The AI researcher Yann LeCunn believes that enhancements will come from embedding AIs in a bodily presence and giving them “world models.” Maybe it is a method to give an AI a sturdy but fluid notion of a social id, and the real-world expertise that may assist it lose its naïveté.

Finally we’re in all probability confronted with a security trilemma on the subject of AI brokers: quick, sensible, and safe are the specified attributes, however you possibly can solely get two. On the drive-through, you need to prioritize quick and safe. An AI agent ought to be educated narrowly on food-ordering language and escalate the rest to a supervisor. In any other case, each motion turns into a coin flip. Even when it comes up heads more often than not, every now and then it’s going to be tails—and together with a burger and fries, the client will get the contents of the money drawer.

From Your Web site Articles

Associated Articles Across the Internet