Since 2018, the consortium MLCommons has been running a kind of Olympics for AI training. The competition, called MLPerf, consists of a set of tasks for training specific AI models, on predefined datasets, to a certain accuracy. Essentially, these tasks, called benchmarks, test how well a hardware and low-level software configuration is set up to train a particular AI model.

Twice a year, companies put together their submissions, usually clusters of CPUs and GPUs and software optimized for them, and compete to see whose submission can train the models fastest.

There is no question that since MLPerf’s inception, the cutting-edge hardware for AI training has improved dramatically. Over the years, Nvidia has released four new generations of GPUs that have since become the industry standard (the latest, Nvidia’s Blackwell GPU, is not yet standard but is growing in popularity). The companies competing in MLPerf have also been using larger clusters of GPUs to tackle the training tasks.

However, the MLPerf benchmarks have also gotten tougher. This increased rigor is by design: the benchmarks are trying to keep pace with the industry, says David Kanter, head of MLPerf. “The benchmarks are meant to be representative,” he says.

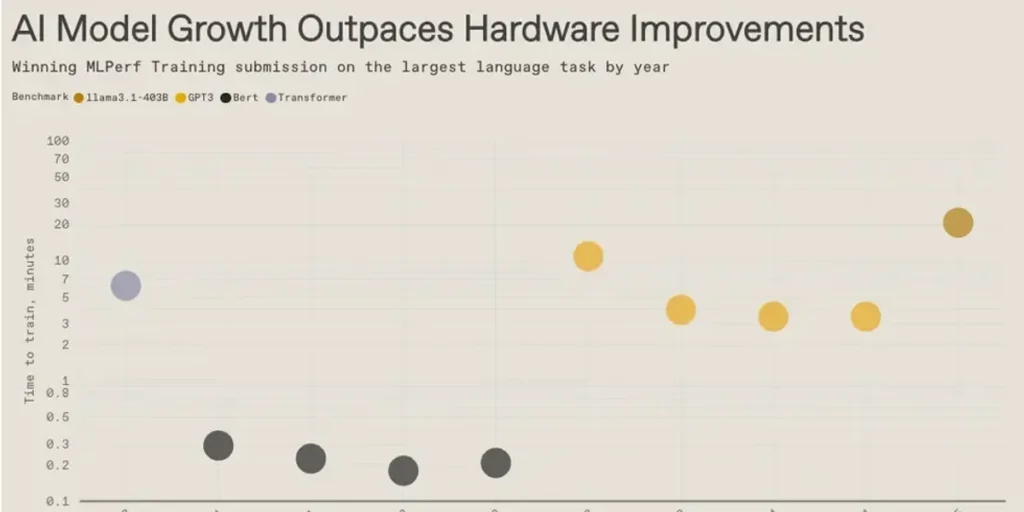

Intriguingly, the data show that large language models and their precursors have been growing in size faster than the hardware has kept up. So each time a new benchmark is introduced, the fastest training time gets longer. Then, hardware improvements gradually bring the execution time down, only to get thwarted again by the next benchmark. Then the cycle repeats itself.
