Zoe Kleinman, Technology editor, BBC

Mark Zuckerberg is said to have started work on Koolau Ranch, his sprawling 1,400-acre compound on the Hawaiian island of Kauai, as far back as 2014.

It is set to include a shelter, complete with its own energy and food supplies, though the carpenters and electricians working on the site were banned from talking about it by non-disclosure agreements, according to a report by Wired magazine. A six-foot wall blocked the project from the view of a nearby road.

Asked last year if he was creating a doomsday bunker, the Facebook founder gave a flat “no”. The underground space spanning some 5,000 sq ft is, he explained, “just like a little shelter, it’s like a basement”.

That hasn’t stopped the speculation, and the same goes for his decision to buy 11 properties in the Crescent Park neighbourhood of Palo Alto in California, apparently adding a 7,000 sq ft underground space beneath them.

Though his building permits refer to basements, according to the New York Times, some of his neighbours call it a bunker. Or a billionaire’s bat cave.

Bloomberg via Getty Images

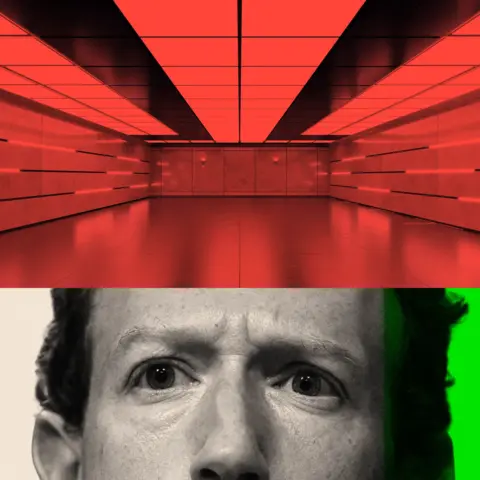

Then there is the speculation around other Silicon Valley billionaires, some of whom appear to have been busy buying up chunks of land with underground spaces, ripe for conversion into multi-million pound luxury bunkers.

Reid Hoffman, the co-founder of LinkedIn, has talked about “apocalypse insurance”. This is something about half of the super-wealthy have, he has previously claimed, with New Zealand a popular destination for homes.

So, could they really be preparing for war, the effects of climate change, or some other catastrophic event the rest of us have yet to learn about?

Getty Images

In the last few years, the advancement of artificial intelligence (AI) has only added to that list of potential existential woes. Many are deeply worried by the sheer speed of its progress.

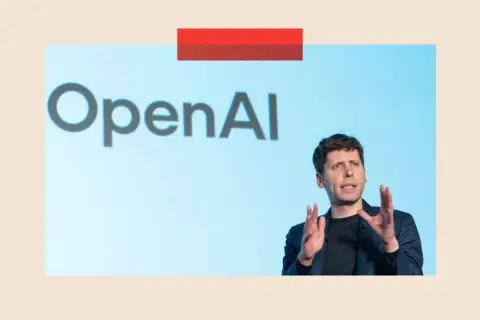

Ilya Sutskever, chief scientist and a co-founder of the technology company OpenAI, is reported to be one of them.

By mid-2023, the San Francisco-based firm had released ChatGPT, the chatbot now used by hundreds of millions of people around the world, and it was working fast on updates.

But by that summer, Mr Sutskever was becoming increasingly convinced that computer scientists were on the brink of developing artificial general intelligence (AGI), the point at which machines match human intelligence, according to a book by journalist Karen Hao.

In a meeting, Mr Sutskever suggested to colleagues that they should dig an underground shelter for the company’s top scientists before such a powerful technology was released on the world, Ms Hao reports.

“We’re definitely going to build a bunker before we release AGI,” he is widely reported to have said, though it is unclear who he meant by “we”.

AFP via Getty Images

It sheds light on a strange fact: many leading computer scientists who are working hard to develop a hugely intelligent form of AI also seem deeply afraid of what it could one day do.

So when exactly, if ever, will AGI arrive? And could it really prove transformational enough to make ordinary people afraid?

An arrival ‘sooner than we think’

Tech billionaires have claimed that AGI is imminent. OpenAI boss Sam Altman said in December 2024 that it will come “sooner than most people in the world think”.

Sir Demis Hassabis, the co-founder of DeepMind, has predicted it will arrive within the next five to ten years, while Anthropic founder Dario Amodei wrote last year that his preferred term, “powerful AI”, could be with us as early as 2026.

Others are dubious. “They move the goalposts all the time,” says Dame Wendy Hall, professor of computer science at Southampton University. “It depends who you talk to.” We are on the phone but I can almost hear the eye-roll.

“The scientific community says AI technology is amazing,” she adds, “but it’s nowhere near human intelligence.”

There would need to be a number of “fundamental breakthroughs” first, agrees Babak Hodjat, chief technology officer of the tech firm Cognizant.

What’s more, it is unlikely to arrive as a single moment. Rather, AI is a rapidly advancing technology that is on a journey, and many companies around the world are racing to develop their own versions of it.

But one reason the idea excites some in Silicon Valley is that it is thought to be a precursor to something even more advanced: ASI, or artificial super intelligence, tech that surpasses human intelligence.

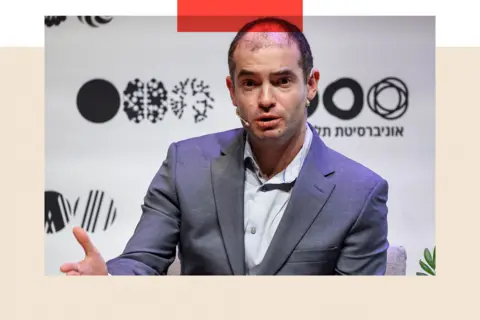

It was back in 1958 that the concept of “the singularity” was attributed posthumously to Hungarian-born mathematician John von Neumann. It refers to the moment when computer intelligence advances beyond human understanding.

Getty Images

More recently, the 2024 book Genesis, written by Eric Schmidt, Craig Mundie and the late Henry Kissinger, explores the idea of a super-powerful technology that becomes so efficient at decision-making and leadership that we end up handing control to it entirely.

It is a matter of when, not if, they argue.

Money for all, without needing a job?

Those in favour of AGI and ASI are almost evangelical about its benefits. It will find new cures for deadly diseases, solve climate change and invent an inexhaustible supply of clean energy, they argue.

Elon Musk has even claimed that super-intelligent AI could usher in an era of “universal high income”.

He recently endorsed the idea that AI will become so cheap and widespread that virtually anyone will want their “own personal R2-D2 and C-3PO” (referencing the droids from Star Wars).

“Everyone will have the best medical care, food, home transport and everything else. Sustainable abundance,” he enthused.

AFP via Getty Images

There is a scary side, of course. Could the tech be hijacked by terrorists and used as an enormous weapon, or what if it decides for itself that humanity is the cause of the world’s problems and destroys us?

“If it’s smarter than you, then we have to keep it contained,” warned Tim Berners-Lee, creator of the World Wide Web, speaking to the BBC earlier this month.

“We have to be able to switch it off.”

Getty Images

Governments are taking some protective steps. In the US, where many leading AI companies are based, President Biden signed an executive order in 2023 that required some firms to share safety test results with the federal government, though President Trump has since revoked some of the order, calling it a “barrier” to innovation.

Meanwhile in the UK, the AI Security Institute, a government-funded research body, was set up two years ago to better understand the risks posed by advanced AI.

And then there are the super-rich with their own apocalypse insurance plans.

“Saying you’re ‘buying a house in New Zealand’ is kind of a wink, wink, say no more,” Reid Hoffman has previously said. The same presumably goes for bunkers.

But there is a distinctly human flaw.

I once met a former bodyguard of one billionaire with his own “bunker”, who told me his security team’s first priority, if this really did happen, would be to eliminate said boss and get in the bunker themselves. And he didn’t seem to be joking.

Is it all alarmist nonsense?

Neil Lawrence is a professor of machine learning at Cambridge University. To him, this whole debate is itself nonsense.

“The notion of Artificial General Intelligence is as absurd as the notion of an ‘Artificial General Vehicle’,” he argues.

“The right vehicle depends on the context. I used an Airbus A350 to fly to Kenya, I use a car to get to the university each day, I walk to the cafeteria… There’s no vehicle that could ever do all of this.”

For him, talk of AGI is a distraction.

Smith Collection/Gado/Getty Images

“The technology we have [already] built allows, for the first time, normal people to talk directly to a machine and potentially have it do what they intend. That is absolutely extraordinary… and utterly transformational.

“The big worry is that we’re so drawn in to big tech’s narratives about AGI that we’re missing the ways in which we need to make things better for people.”

Current AI tools are trained on mountains of data and are good at spotting patterns, whether signs of a tumour in scans or the word most likely to come after another in a particular sequence. But they do not “feel”, however convincing their responses may appear.

“There are some ‘cheaty’ ways to make a Large Language Model (the foundation of AI chatbots) act as if it has memory and learns, but these are unsatisfying and quite inferior to humans,” says Mr Hodjat.

Vince Lynch, CEO of the California-based IV.AI, is also wary of overblown declarations about AGI.

“It’s great marketing,” he says. “If you are the company that’s building the smartest thing that’s ever existed, people are going to want to give you money.”

He adds: “It’s not a two-years-away thing. It requires so much compute, so much human creativity, so much trial and error.”

When I ask whether he believes AGI will ever materialise, there is a long pause.

“I really don’t know.”

Intelligence without consciousness

In some ways, AI has already gained the edge over human brains. A generative AI tool can be an expert in medieval history one minute and solve complex mathematical equations the next.

Some tech companies say they don’t always know why their products respond the way they do. Meta says there are some signs of its AI systems improving themselves.

Getty Images News

Ultimately, though, no matter how intelligent machines become, biologically the human brain still wins.

It has about 86 billion neurons and 600 trillion synapses, many more than the artificial equivalents. The brain doesn’t need to pause between interactions, and it is constantly adapting to new information.

“If you tell a human that life has been found on an exoplanet, they will immediately learn that, and it will affect their world view going forward. An LLM [Large Language Model] will only know that as long as you keep repeating it to them as a fact,” says Mr Hodjat.

“LLMs also do not have meta-cognition, which means they don’t quite know what they know. Humans seem to have an introspective capacity, sometimes referred to as consciousness, that allows them to know what they know.”

It is a fundamental part of human intelligence, and one that is yet to be replicated in a lab.

Top picture credit: The Washington Post via Getty Images/ Getty Images MASTER. Lead image shows Mark Zuckerberg (below) and a stock image of an unidentified bunker in an unknown location (above).

BBC InDepth is the home on the website and app for the best analysis, with fresh perspectives that challenge assumptions and deep reporting on the biggest issues of the day. And we showcase thought-provoking content from across BBC Sounds and iPlayer too.