Benchmarking large language models presents some unusual challenges. For one, the main goal of many LLMs is to produce compelling text that's indistinguishable from human writing. And success in that task may not correlate with metrics traditionally used to judge processor performance, such as instruction execution rate.

But there are solid reasons to persevere in trying to gauge the performance of LLMs. Otherwise, it's impossible to know quantitatively how much better LLMs are becoming over time, or to estimate when they might be capable of completing substantial and useful projects by themselves.

Large language models are more challenged by tasks that have a high "messiness" score. Model Evaluation & Threat Research

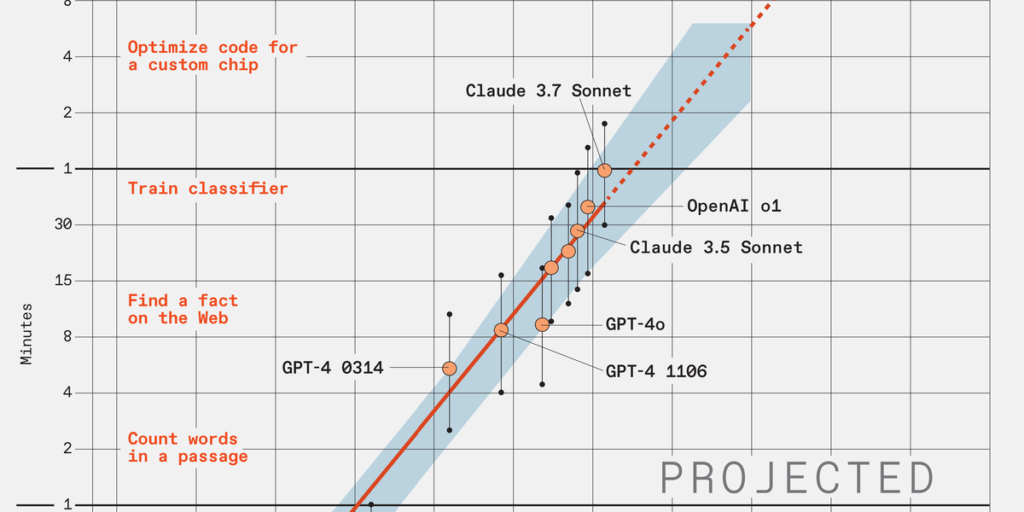

That was a key motivation behind work at Model Evaluation & Threat Research (METR). The organization, based in Berkeley, Calif., "researches, develops, and runs evaluations of frontier AI systems' ability to complete complex tasks without human input." In March, the group released a paper called Measuring AI Ability to Complete Long Tasks, which reached a startling conclusion: According to a metric it devised, the capabilities of key LLMs are doubling every seven months. This realization leads to a second conclusion, equally stunning: By 2030, the most advanced LLMs should be able to complete, with 50 percent reliability, a software-based task that takes humans a full month of 40-hour workweeks. And the LLMs would likely be able to do many of these tasks much more quickly than humans, taking only days, or even just hours.
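The 2030 projection rests on simple compounding. The following is a minimal sketch, not METR's own analysis, and it rests on three assumptions. First, the seven-month doubling holds exactly. Second, a work month is approximated as four 40-hour weeks, or 160 hours. Third, the starting horizon is a hypothetical one hour, which is a placeholder and not a figure from this article.

```python
import math

DOUBLING_MONTHS = 7.0  # doubling period cited in the article


def horizon_after(h0_hours: float, months: float,
                  doubling_months: float = DOUBLING_MONTHS) -> float:
    """Project a time horizon forward, assuming clean exponential growth."""
    return h0_hours * 2 ** (months / doubling_months)


def months_to_reach(h0_hours: float, target_hours: float,
                    doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months until the projected horizon grows from h0_hours to target_hours."""
    return doubling_months * math.log2(target_hours / h0_hours)


# Hypothetical starting horizon of 1 hour (illustrative assumption only).
start_hours = 1.0
# One month of 40-hour workweeks, approximated as four weeks = 160 hours.
work_month_hours = 4 * 40

m = months_to_reach(start_hours, work_month_hours)
print(f"{m:.1f} months (about {m / 12:.1f} years)")  # prints: 51.3 months (about 4.3 years)
```

Because each doubling takes seven months, the waiting time grows only with the logarithm of the gap. A starting horizon twice as long would cut exactly seven months from the estimate.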

An LLM Might Write a Decent Novel by 2030

Such tasks might include starting up a company, writing a novel, or greatly improving an existing LLM. The availability of LLMs with that kind of capability "would come with enormous stakes, both in terms of potential benefits and potential risks," AI researcher Zach Stein-Perlman wrote in a blog post.

At the heart of the METR work is a metric the researchers devised called "task-completion time horizon." It's the amount of time human programmers would take, on average, to do a task that an LLM can complete with some specified degree of reliability, such as 50 percent. A plot of this metric for some general-purpose LLMs going back several years [main illustration at top] shows clear exponential growth, with a doubling period of about seven months. The researchers also considered the "messiness" factor of the tasks, with "messy" tasks being those that more closely resembled ones in the "real world," according to METR researcher Megan Kinniment. Messier tasks were more challenging for LLMs [smaller chart, above].
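The definition above does not say how a horizon is computed from test results. One plausible approach, assumed here for illustration and not described in this article, works in two steps. First, model an LLM's probability of success as a logistic function of the logarithm of human completion time. Second, solve for the time at which that probability equals the chosen reliability. The coefficients `ALPHA` and `BETA` below are hypothetical and are not fitted values from METR.

```python
import math


def success_prob(t_minutes: float, alpha: float, beta: float) -> float:
    """Assumed model: success probability falls logistically as log2(human time) grows."""
    return 1.0 / (1.0 + math.exp(-(alpha - beta * math.log2(t_minutes))))


def time_horizon(alpha: float, beta: float, reliability: float = 0.5) -> float:
    """Human time (minutes) at which the assumed model succeeds with `reliability`."""
    if not 0.0 < reliability < 1.0:
        raise ValueError("reliability must be strictly between 0 and 1")
    # Invert the logistic: alpha - beta * log2(t) = logit(reliability)
    logit = math.log(reliability / (1.0 - reliability))
    return 2.0 ** ((alpha - logit) / beta)


# Hypothetical coefficients, chosen only to make the numbers easy to read.
ALPHA, BETA = 6.0, 1.0
print(time_horizon(ALPHA, BETA))       # 50% horizon: 2**6 = 64.0 minutes
print(time_horizon(ALPHA, BETA, 0.8))  # 80% horizon: shorter than the 50% one
```

With a positive `beta`, demanding higher reliability always yields a shorter horizon. For that reason, a horizon figure is meaningful only alongside its reliability level, which is 50 percent in the headline results.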

If the idea of LLMs improving themselves strikes you as having a certain singularity–robocalypse quality to it, Kinniment wouldn't disagree with you. But she does add a caveat: "You could get acceleration that is quite intense and does make things meaningfully more difficult to control without it necessarily resulting in this massively explosive growth," she says. It's quite possible, she adds, that various factors could slow things down in practice. "Even if it were the case that we had very, very clever AIs, this pace of progress could still end up bottlenecked on things like hardware and robotics."